The financial industry has been using computer science for algorithmic trading since the 1980s. Although these systems have been successful, traditional trading algorithms are built from two hand-coded components: strategy and execution. A scripted agent is therefore limited to the patterns its human programmers put into it. Because an AI agent can process far more data, a neural network may be able to identify new patterns in financial markets.

A 2019 study found that 92% of all trades in the Forex market are executed by algorithmic trading bots.* By the end of this paper, there will be one more.

Reinforcement Learning (RL) makes our deep AI agents more flexible than scripted bots. With techniques such as Q-Learning and Deep Q-Learning, the AI agent learns from experience as it trades, which lets it adapt to ever-changing financial markets. Using neural networks lets the AI agent make trading decisions autonomously.
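
Q-Learning keeps an estimate Q(s, a) of the return from taking action a in state s, and after every step nudges it toward the observed reward plus the discounted value of the best next action. The following is a minimal tabular sketch of that update with epsilon-greedy exploration. The states, rewards, and hyperparameters are hypothetical toy values, and this is not the code we trained; our agent itself was trained with stable_baselines' A2C.

```python
import random
from collections import defaultdict
from typing import DefaultDict, Hashable, Sequence, Tuple

# Q-table mapping (state, action) to an estimated return; unseen pairs start at 0.0
QTable = DefaultDict[Tuple[Hashable, int], float]

def epsilon_greedy(q: QTable, state: Hashable, actions: Sequence[int],
                   epsilon: float, rng: random.Random) -> int:
    """Explore with probability epsilon, otherwise pick the highest-valued action."""
    if rng.random() < epsilon:
        return rng.choice(list(actions))
    return max(actions, key=lambda a: q[(state, a)])

def q_update(q: QTable, state: Hashable, action: int, reward: float,
             next_state: Hashable, actions: Sequence[int],
             alpha: float = 0.1, gamma: float = 0.99) -> float:
    """One Q-learning step: Q(s,a) += alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))."""
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]

# Hypothetical usage: states "up"/"down", actions 0 = sell, 1 = buy
q: QTable = defaultdict(float)
actions = (0, 1)
q_update(q, "up", 1, reward=1.0, next_state="down", actions=actions, alpha=0.5, gamma=0.9)
print(q[("up", 1)])  # 0.5
```

Deep Q-Learning keeps the same update target but replaces the table with a neural network, which is what lets it handle state spaces too large to enumerate.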

* Kissell, R. 2020. Algorithmic Trading Methods. Elsevier Science.

OpenAI Gym for algorithmic trading

We used Reinforcement Learning and Q-Learning to create an AI agent that trades an asset. For the environment, we used AnyTrading, a collection of OpenAI Gym environments built for reinforcement learning-based trading algorithms. The RL algorithm implementations came from the stable_baselines library. Our code is based on the work of Nicholas Renotte from IBM.

Recent versions of tensorflow and tensorflow-gpu are not compatible with stable_baselines, which is built on TensorFlow 1.x, so we had to install older versions. Managing the library imports was also easier on Colab than in a local Jupyter Notebook.
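
Because the failure mode here is a version mismatch, a small guard at the top of the notebook can fail fast with a readable message instead of an obscure error deep inside an import. This is a hypothetical helper, not part of either library; the only assumption it encodes is that stable_baselines needs a TensorFlow release from the 1.x series.

```python
def is_tf1(version: str) -> bool:
    """Return True if a TensorFlow version string belongs to the 1.x series."""
    major = version.split(".")[0]
    return major == "1"

def require_tf1(version: str) -> None:
    """Raise a readable error when the installed TensorFlow is not 1.x."""
    if not is_tf1(version):
        raise RuntimeError(
            f"stable_baselines needs TensorFlow 1.x, found {version}; "
            "install an older tensorflow / tensorflow-gpu release"
        )

# In the notebook one would call: import tensorflow as tf; require_tf1(tf.__version__)
require_tf1("1.15.0")  # a 1.x version string passes silently
```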

The environment for Q-Learning is built from the market's past performance. We use the OpenAI Gym environment 'stocks-v0' from AnyTrading, which is purpose-built for this task; its StocksEnv class inherits from TradingEnv. The window size sets how many previous rows the agent evaluates at each step. The frame bound sets the range of rows to use; its starting value must be at least the window size, so we set it equal to the window size. In general, the more data we use, the better the decisions the AI agent can make.
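
The way the window size and frame bound carve up the price history can be sketched with plain lists. This is a hypothetical helper, not the StocksEnv implementation: we assume, following the description above, that each observation holds the window_size rows strictly before the current tick, and we ignore the extra features the real environment builds, so its details may differ. The example uses made-up prices 0 to 9.

```python
from typing import List, Sequence, Tuple

def select_frame(prices: Sequence[float], window_size: int,
                 frame_bound: Tuple[int, int]) -> List[float]:
    """Slice the rows the environment will use, keeping window_size rows of history."""
    start, end = frame_bound
    if start < window_size:
        # Otherwise the first tradable tick would not have a full window behind it.
        raise ValueError("frame_bound[0] must be >= window_size")
    return list(prices[start - window_size:end])

def observations(frame: Sequence[float], window_size: int) -> List[List[float]]:
    """One observation per tradable tick: the window_size rows just before it."""
    return [list(frame[t - window_size:t]) for t in range(window_size, len(frame))]

# Hypothetical data: ten prices, a window of 3, trading on rows 3..7
frame = select_frame(list(range(10)), window_size=3, frame_bound=(3, 8))
print(frame)                   # [0, 1, 2, 3, 4, 5, 6, 7]
print(observations(frame, 3))  # [[0, 1, 2], [1, 2, 3], [2, 3, 4], [3, 4, 5], [4, 5, 6]]
```

The number of decisions per episode is end - start, so widening the frame bound is the direct way to give the agent more data.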

Our action space is limited to two discrete actions: buy or sell. The state (the observation) contains all the data points from the environment that the AI agent needs to make a decision.
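
The two actions matter only through the position they lead to. A minimal sketch, assuming (as we understand AnyTrading's convention) that Sell = 0 and Buy = 1, and that an action changes the position only when it flips it: buying while short goes long, selling while long goes short, and anything else holds the current position. The Action, Position, and next_position names are hypothetical.

```python
from enum import Enum

class Action(Enum):
    SELL = 0
    BUY = 1

class Position(Enum):
    SHORT = 0
    LONG = 1

def next_position(position: Position, action: Action) -> Position:
    """Buying while short flips to long; selling while long flips to short; otherwise hold."""
    if position is Position.SHORT and action is Action.BUY:
        return Position.LONG
    if position is Position.LONG and action is Action.SELL:
        return Position.SHORT
    return position

# Hypothetical episode: the agent starts short and emits one action per tick
position = Position.SHORT
history = []
for action in [Action.BUY, Action.BUY, Action.SELL, Action.BUY]:
    position = next_position(position, action)
    history.append(position.name)
print(history)  # ['LONG', 'LONG', 'SHORT', 'LONG']
```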

Choosing the right algorithms for algorithmic trading

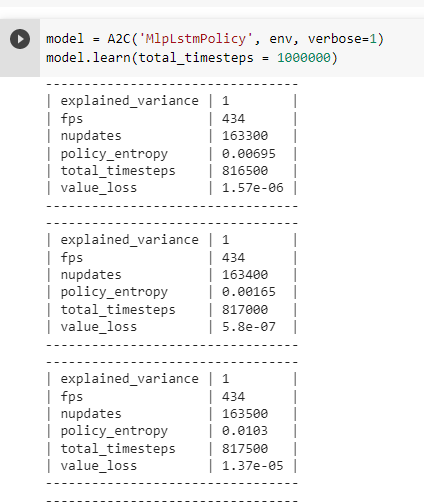

We trained our model using an Actor-Critic method called A2C (Advantage Actor-Critic). Both the actor (the policy) and the critic (the value estimate) are parameterized by a Long Short-Term Memory (LSTM) neural network. The total timesteps parameter sets how many environment steps the model is trained for. During training we monitored two metrics: the closer the explained variance is to one, the better the critic's value estimates predict the actual returns, and ideally the value loss should be as close to zero as possible. We found that training for 100,000 timesteps was just as effective as training for one million.
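
Both training metrics come from the critic. A2C turns each batch of rewards into discounted returns, measures how much better each step went than the critic predicted (the advantage, which weights the actor's update), and trains the critic to shrink the gap between its predictions and those returns. The sketch below computes these quantities for one short rollout with hypothetical numbers. The value loss here is a plain mean squared error (the library may scale it differently), and explained_variance follows the usual 1 - Var(returns - predictions) / Var(returns) definition. In stable_baselines, all of this happens inside model.learn(total_timesteps=...), which logs both metrics.

```python
import math
from statistics import pvariance
from typing import List, Sequence

def discounted_returns(rewards: Sequence[float], bootstrap_value: float,
                       gamma: float = 0.99) -> List[float]:
    """n-step returns R_t = r_t + gamma * R_{t+1}, seeded with the critic's value of the last state."""
    returns = []
    running = bootstrap_value
    for r in reversed(rewards):
        running = r + gamma * running
        returns.append(running)
    return returns[::-1]

def advantages(returns: Sequence[float], values: Sequence[float]) -> List[float]:
    """How much better each step turned out than the critic predicted."""
    return [R - v for R, v in zip(returns, values)]

def value_loss(returns: Sequence[float], values: Sequence[float]) -> float:
    """Mean squared error of the critic's predictions; zero means a perfect critic."""
    return sum((R - v) ** 2 for R, v in zip(returns, values)) / len(returns)

def explained_variance(returns: Sequence[float], values: Sequence[float]) -> float:
    """1 is a perfect critic, 0 is no better than predicting a constant."""
    var_y = pvariance(returns)
    if var_y == 0:
        return math.nan  # undefined when the returns do not vary
    residuals = [R - v for R, v in zip(returns, values)]
    return 1.0 - pvariance(residuals) / var_y

rewards = [1.0, 0.0, 1.0]  # hypothetical per-step rewards
values = [1.0, 0.5, 1.0]   # hypothetical critic predictions
R = discounted_returns(rewards, bootstrap_value=0.0, gamma=0.5)
print(R)                      # [1.25, 0.5, 1.0]
print(advantages(R, values))  # [0.25, 0.0, 0.0]
print(value_loss(R, values), explained_variance(R, values))
```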

Evaluating our model

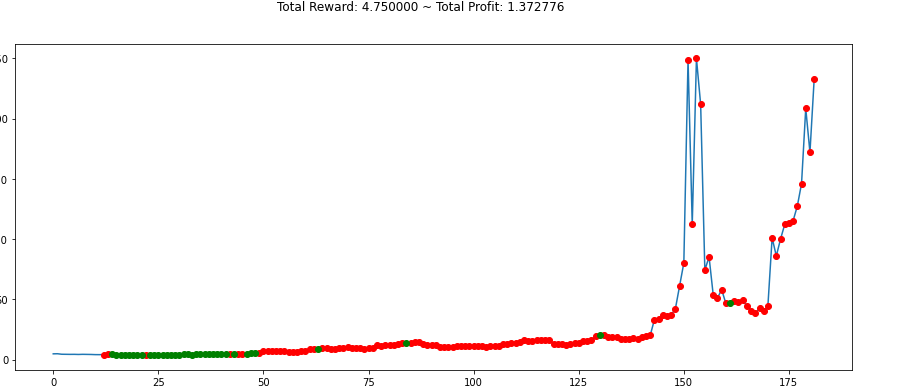

The red and green dots in this graph mark the agent's two trading positions. Red means the AI agent takes a 'short' position and sells the asset; green means it goes 'long' and buys the asset.

Our tests show a 37% profit over 170 days, which we consider good enough to move to the production stage.

Our AI agent outperformed an agent that was trading randomly. The model is not perfect, and we expect it to perform better with a larger dataset. Thanks to the modular design of the code, collaborators around the globe can easily try out different solutions.

We also ran experiments with longer episodes and with a Deep Q-Network (DQN), but neither produced better results. The DQN was also more resource-intensive and took many more hours to evaluate. After a week of experimentation, we conclude that Deep Reinforcement Learning can be applied to financial trading using Q-Learning and an A2C algorithm.
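
The comparison with a random agent can be made concrete by running many coin-flipping agents over the same price path and counting how often the trained agent's profit beats theirs. Below is a minimal sketch under simplifying assumptions that are ours, not AnyTrading's: a sell means holding cash, there are no trading fees, an open position is valued at the last price, and both the prices and the "trained" action sequence are made up. Profit is expressed as a ratio, so a 37% profit corresponds to a ratio of 1.37.

```python
import random
from typing import List, Sequence

SELL, BUY = 0, 1  # hypothetical action encoding

def long_only_profit(prices: Sequence[float], actions: Sequence[int]) -> float:
    """Total profit ratio (1.0 = break-even) of a long-or-cash strategy, ignoring fees."""
    if len(prices) != len(actions):
        raise ValueError("need exactly one action per price")
    wealth = 1.0
    entry = None  # price at which the open long position was bought
    for price, action in zip(prices, actions):
        if action == BUY and entry is None:
            entry = price              # go long
        elif action == SELL and entry is not None:
            wealth *= price / entry    # close the long position
            entry = None
    if entry is not None:              # value any open position at the final price
        wealth *= prices[-1] / entry
    return wealth

def random_baseline(prices: Sequence[float], n_runs: int = 1000, seed: int = 0) -> List[float]:
    """Profit ratios of n_runs agents that pick BUY or SELL uniformly at random each tick."""
    rng = random.Random(seed)
    return [long_only_profit(prices, [rng.choice((SELL, BUY)) for _ in prices])
            for _ in range(n_runs)]

# Hypothetical prices and a hypothetical "trained" action sequence
prices = [100.0, 110.0, 105.0, 120.0, 115.0]
agent = long_only_profit(prices, [BUY, SELL, BUY, SELL, SELL])
runs = random_baseline(prices)
beat = sum(agent > r for r in runs) / len(runs)
print(f"agent: {100 * (agent - 1):.1f}% profit")  # agent: 25.7% profit
print(f"random mean: {100 * (sum(runs) / len(runs) - 1):.1f}%, agent beats {beat:.0%} of runs")
```

Beating most of a large population of random runs is stronger evidence than beating a single random agent, since one lucky coin-flipper can look good on a short price history.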

Conclusion

Deep Reinforcement Learning has been used to beat top human players at board games such as chess and Go. AI agents can also make video games like StarCraft II more fun by giving AI opponents human-like movements. Because AI agents built on deep learning and neural networks can detect patterns that humans miss, they may give us insights into new gameplay and financial strategies that we had not considered before.

Comparing Q-Learning with Deep Q-Networks, we find that DQNs can solve more complicated problems but are more resource-intensive than QL. Hence Reinforcement Learning with QL was the better choice for our AI trading bot. In game theory, using AI to pursue economic or military goals can also be modeled as a game, just like chess or StarCraft II. In the same spirit, psychologist Jordan Peterson says that you should never stop a child from skateboarding, because they are training their neural network for something more important.